Department of Information and Communications Engineering

Finnish Center for Artificial Intelligence (FCAI)

Predictive models of human behavior in everyday interactive tasks

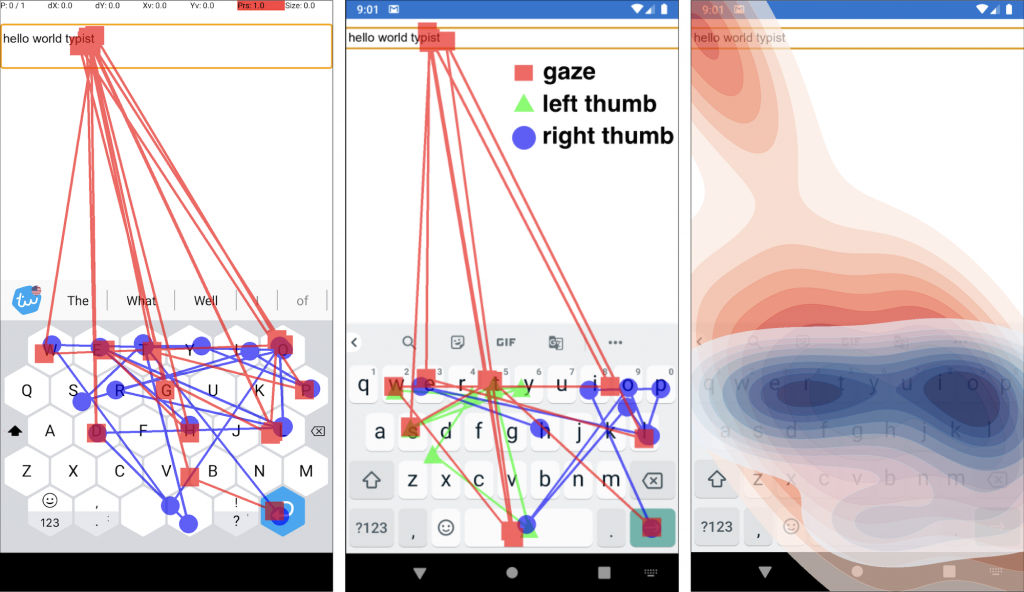

We study computational models of human behavior and their applications in computing systems. Our models emulate human-like performance in everyday interactive tasks: They simulate hand and eye movements as well as thinking. They produce estimates of human performance, ergonomics, and common errors. The primary application areas lie within human-computer interaction and human-AI interaction, including:

- Text entry

- Visual search

- Navigation

- Browsing

- Driving

- Multi-attribute choice

- Information visualizations

- Virtual and augmented reality

- Human-robot interaction

- Recommendation systems

Better computing technology via better human models

Our objective is to improve the ease of use, safety, and user experience of computers. Much of the potential of computing remains untapped because designs are mismatched with users' abilities and preferences. Precise yet practical predictive models of human behavior could revolutionize how interactions are designed and engineered. Understanding the latent factors that drive human behavior is crucial to building human-centric technology. Applications of our models include:

- Inferring users’ capabilities, beliefs, and preferences from data

- Generating training data for ML algorithms

- Evaluating designs against key performance and ergonomics metrics

- Generating designs to support designers and decision-makers

- Optimizing designs against human objectives

- Driving assistive and adaptive interfaces

- Improving accessibility
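To illustrate the first application, inferring a user's capability from data, here is a minimal sketch under loudly stated assumptions. It assumes a hypothetical toy model in which pointing endpoints scatter as a Gaussian with spread `sigma` around a target of half-width `half_width`, and it recovers `sigma` from an observed hit count by maximum likelihood over a grid. All names and numbers are illustrative, not the group's models or data.

```python
import math

def hit_probability(sigma, half_width):
    # Hypothetical toy model: endpoint ~ Normal(0, sigma) centered on the target,
    # so P(|x| < w) = erf(w / (sigma * sqrt(2))).
    return math.erf(half_width / (sigma * math.sqrt(2)))

def log_likelihood(sigma, hits, trials, half_width):
    # Binomial log-likelihood of the observed hits (constant term dropped).
    p = hit_probability(sigma, half_width)
    p = min(max(p, 1e-12), 1 - 1e-12)  # guard against log(0)
    return hits * math.log(p) + (trials - hits) * math.log(1 - p)

def infer_sigma(hits, trials, half_width, grid):
    # Pick the candidate noise level that best explains the observed data.
    return max(grid, key=lambda s: log_likelihood(s, hits, trials, half_width))

# Usage with invented numbers: 68 hits out of 100 trials on a 5 mm half-width target.
candidates = [0.1 * i for i in range(1, 201)]  # 0.1 .. 20.0 mm
print(infer_sigma(68, 100, 5.0, candidates))
```

The grid search stands in for the simulation-based inference a richer model would need; swapping in a simulator for `hit_probability` leaves the rest unchanged.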

Read more about what simulation can do for HCI and HAI. Read how we helped redesign the French keyboard standard.

Cognitive science meets machine learning meets HCI

We follow a distinctive ‘grey box’ approach to modeling that combines 1) theory-driven models of the human mind (cognition) and body (biomechanics) with 2) contemporary machine learning methods such as deep reinforcement learning and language models. Our theoretical foundation is computational rationality, a framework that predicts human behavior as policies that are near-optimal given human-like constraints. Many of these constraints, particularly those of cognition, perception, motor control, and biomechanics, have long been recognized but have not yet been incorporated into comprehensive models. Advances in machine learning now make this integration feasible.
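The core idea of computational rationality, choosing the best policy within human-like constraints, can be sketched in a few lines. This toy example is an assumption throughout: the "policy" is just a movement duration, the hypothetical constraint is that endpoint spread shrinks as `noise_k / duration`, and utility is a reward for hitting minus a cost per second. The group's models use deep reinforcement learning; brute-force search over one parameter is substituted here for clarity.

```python
import math

def hit_probability(duration, half_width, noise_k):
    # Hypothetical speed-accuracy bound: slower movements have smaller endpoint spread.
    sigma = noise_k / duration
    return math.erf(half_width / (sigma * math.sqrt(2)))

def expected_utility(duration, half_width, noise_k, reward, time_cost):
    # Reward for hitting the target, minus a linear cost for time spent.
    return reward * hit_probability(duration, half_width, noise_k) - time_cost * duration

def best_duration(half_width, noise_k=2.0, reward=1.0, time_cost=0.1):
    # Near-optimal policy under the bound: search durations from 0.05 s to 5.0 s.
    durations = [0.05 * i for i in range(1, 101)]
    return max(durations,
               key=lambda d: expected_utility(d, half_width, noise_k, reward, time_cost))

# Usage: the model predicts slower movements toward smaller targets.
print(best_duration(1.0), best_duration(2.0))
```

The prediction emerges from optimization rather than being hand-coded: tightening the target or raising the reward lengthens the chosen movement, which is the kind of behavior a computationally rational model is meant to explain.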

Read more about computational rationality and how our models work. Read more about how our models can drive computational design and human-centric AI.

Rigorous validation against high-quality human datasets

Our laboratory provides equipment and space for experimental research in support of computational modeling. We have equipment for measuring human motion at the micro scale (fingers, hands, eyes) and the meso scale (body). Take a look!

Members

We are an interdisciplinary group with backgrounds in computational sciences, cognitive sciences, and design.

Antti Oulasvirta (Prof)

Danqing Shi (postdoc)

Hee-Seung Moon (postdoc)

Joon-Gi Shin (postdoc)

Suyog Chandramouli (postdoc)

Yi-Chi Liao (PhD student)

Lena Hegemann (PhD student)

Aini Putkonen (PhD student)

Yue Jiang (PhD student)

Location

We are located in the CS building (Konemiehentie 2) on the beautiful campus of Aalto University in Espoo, Finland.