Traditionally, touchscreen typing has been studied in terms of motor performance. However, recent research has exposed a decisive role of visual attention being shared between the keyboard and the text area. Strategies for this are known to adapt to the task, design, and user. In this paper, we propose a unifying account of touchscreen typing, regarding it as optimal supervisory control. Under this theory, rules for controlling visuo-motor resources are learned via exploration in pursuit of maximal typing performance. The paper outlines the control problem and explains how visual and motor limitations affect it. We then present a model, implemented via reinforcement learning, that simulates co-ordination of eye and finger movements. Comparison with human data affirms that the model creates realistic finger- and eye-movement patterns and shows human-like adaptation. We demonstrate the model's utility for interface development in evaluating touchscreen keyboard designs.

Visualisation of model behaviour typing a sentence under three conditions:

(a) No errors made

(b) Errors made

(c) With auto-correction

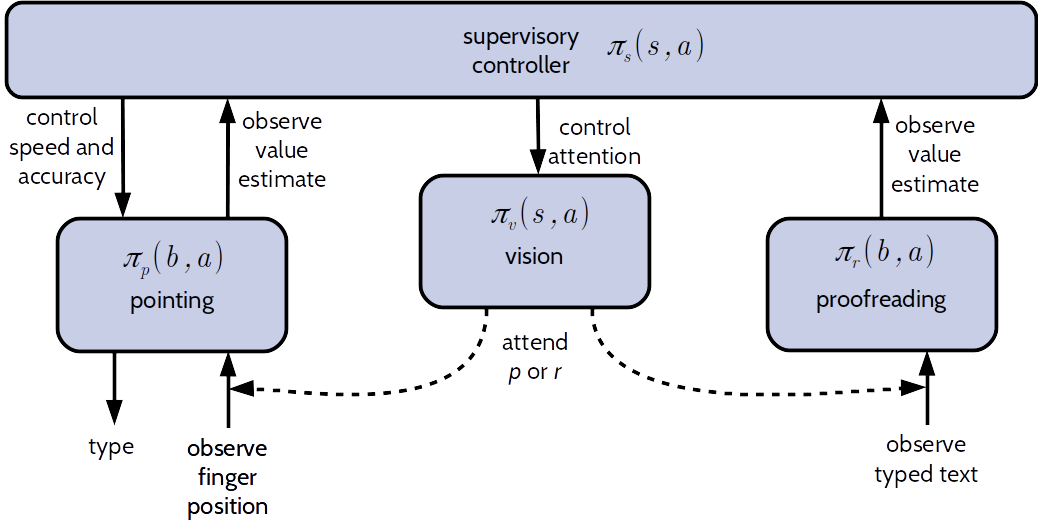

The model of touchscreen typing is formulated as an optimal supervisory control problem. It is composed of four distinct agents: supervisor, pointing, vision, and proofreading.

Selected typing-performance metrics are shown below: aggregate values for the human subjects and the simulations, the absolute difference between the two means, and that difference expressed in SDs of the human data. We consider a prediction good (green shading) if it falls within the range of the human data and within one SD of the human mean. We deem a value still acceptable (realistic but an outlier relative to the humans, in red) if it is within three SDs of the human mean. Human data can be found here.

| Metric | Human mean | Min. human value | Max. human value | Human SD | Model mean | Mean diff. | Diff. in SD |
|---|---|---|---|---|---|---|---|
| IKI (ms) | 380.94 | 311.35 | 514.25 | 50.95 | 398.85 | 17.92 | 0.35 |
| WPM | 27.19 | 19.12 | 33.30 | 3.61 | 25.22 | 1.97 | 0.55 |
| Chunk length | 3.98 | 3.44 | 5.13 | 0.41 | 3.90 | 0.08 | 0.20 |
| Backspaces | 2.61 | 0.35 | 8.80 | 1.81 | 1.49 | 1.13 | 0.62 |
| Immediate backspacing | 0.40 | 0.00 | 1.05 | 0.26 | 0.31 | 0.09 | 0.35 |
| Delayed backspacing | 0.63 | 0.10 | 2.15 | 0.47 | 0.47 | 0.17 | 0.35 |
| Fixation count | 24.04 | 17.75 | 36.38 | 4.56 | 23.21 | 0.83 | 0.18 |
| Gaze shifts | 3.91 | 1.19 | 8.69 | 1.50 | 4.16 | 0.25 | 0.17 |
| Gaze keyboard time ratio | 0.70 | 0.36 | 0.87 | 0.14 | 0.87 | 0.18 | 1.31 |
| Finger travel distance (cm) | 25.29 | 20.81 | 27.64 | 1.33 | 22.09 | 3.20 | 2.41 |
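The goodness criterion described above the table can be written out as a short check. The following is a minimal Python sketch, not the authors' evaluation code: the names `HumanStats`, `diff_in_sd`, and `classify` are hypothetical, and the label `"poor"` for values beyond three SDs is an assumption, since the text only defines the good and acceptable bands. Values recomputed from the rounded table entries may differ slightly from the published "Diff. in SD" column.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HumanStats:
    """Aggregate human values for one metric (hypothetical container)."""
    mean: float
    minimum: float
    maximum: float
    sd: float


def diff_in_sd(human: HumanStats, model_mean: float) -> float:
    """Absolute difference between model and human means, in human SDs."""
    return abs(model_mean - human.mean) / human.sd


def classify(human: HumanStats, model_mean: float) -> str:
    """Apply the criterion from the text to one metric.

    good:       inside the human range AND within one SD of the human mean
    acceptable: within three SDs of the human mean
    poor:       anything else (label assumed, not taken from the source)
    """
    distance = diff_in_sd(human, model_mean)
    in_human_range = human.minimum <= model_mean <= human.maximum
    if in_human_range and distance <= 1.0:
        return "good"
    if distance <= 3.0:
        return "acceptable"
    return "poor"


# Two rows copied from the table above.
iki = HumanStats(mean=380.94, minimum=311.35, maximum=514.25, sd=50.95)
finger_travel = HumanStats(mean=25.29, minimum=20.81, maximum=27.64, sd=1.33)

print(classify(iki, 398.85))           # good
print(classify(finger_travel, 22.09))  # acceptable
```

Under this reading, finger travel distance is only acceptable even though the model mean lies inside the human range, because it is more than one SD from the human mean.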

All model code and data are open for anyone to use.

Touchscreen Typing As Optimal Supervisory Control.

In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI ’21).

@inproceedings{jokinen2021-touchscreen,
  title = {Touchscreen Typing as Optimal Supervisory Control},
  author = {Jokinen, Jussi P P and Acharya, Aditya and Uzair, Mohammad and Jiang, Xinhui and Oulasvirta, Antti},
  booktitle = {Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI '21)},
  year = {2021},
  publisher = {ACM},
  doi = {10.1145/3411764.3445483},
  keywords = {touchscreen typing, computational modelling, rational adaptation}
}

How We Type: Eye and Finger Movement Strategies in Mobile Typing.

In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20).

Project Page

Adaptive feature guidance: Modelling visual search with graphical layouts.

International Journal of Human-Computer Studies (IJHCS ’20).

Paper

Hierarchical Reinforcement Learning Explains Task Interleaving Behavior.

Computational Brain & Behavior (CB&B '20).

Paper

Multitasking in driving as optimal adaptation under uncertainty.

Human Factors: The Journal of the Human Factors and Ergonomics Society (HFES '20).

Paper

How do People Type on Mobile Devices? Observations from a Study with 37,000 Volunteers.

In Proceedings of 21st International Conference on Human-Computer Interaction with Mobile Devices and Services, MobileHCI 2019.

Project Page

Observations on Typing from 136 Million Keystrokes.

In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18).

Project Page

Modelling learning of new keyboard layouts.

In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI ’17).

Project Page

How We Type: Movement Strategies and Performance in Everyday Typing.

In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (CHI '16).

Project Page

For questions and further information, please contact:

Jussi P.P. Jokinen

Email:

jussi.jokinen (at) aalto.fi

Phone:

+358 45 196 1429

This research has been supported by the Academy of Finland projects BAD and Human Automata, and by the Finnish Center for AI (FCAI).