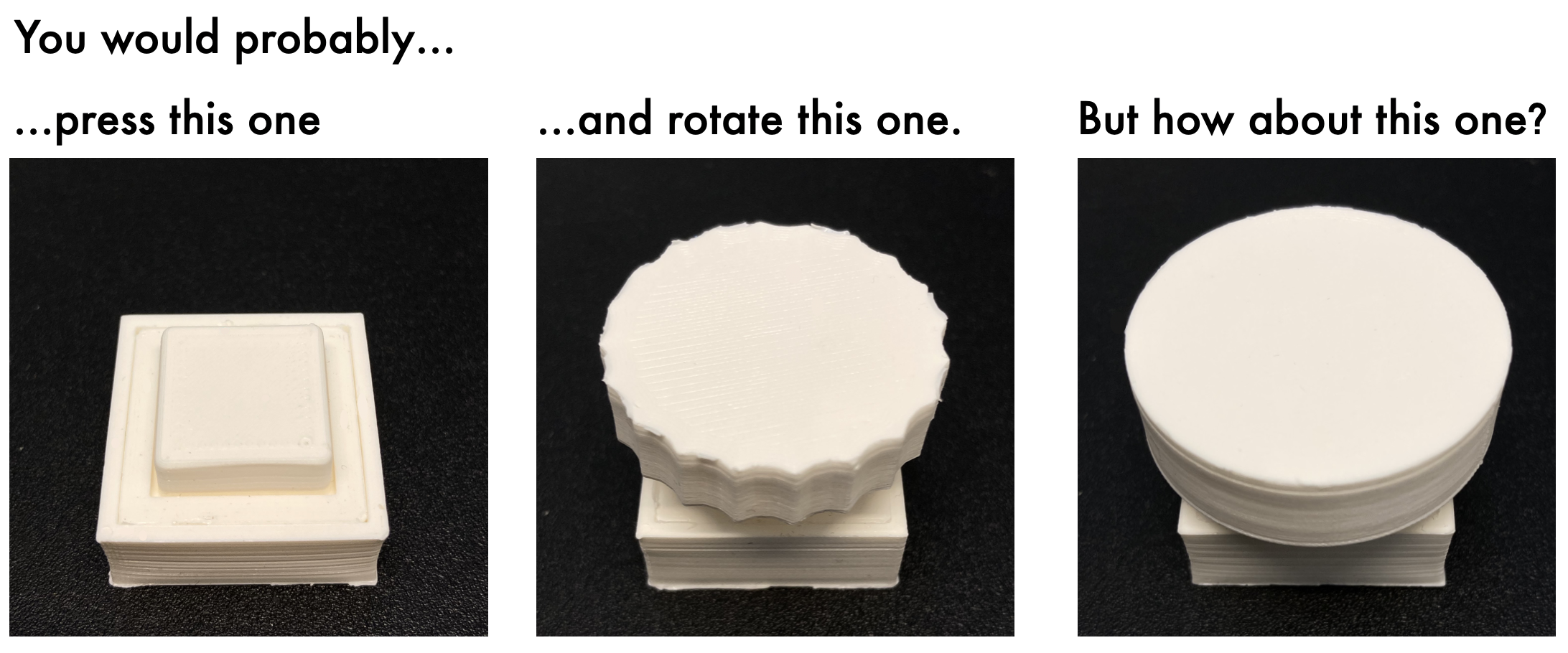

Affordance refers to the perception of possible actions allowed by an object. Despite its relevance to human-computer interaction, no existing theory explains the mechanisms that underpin affordance-formation; that is, how affordances are discovered and adapted via interaction. We propose an integrative theory of affordance-formation based on the theory of reinforcement learning in cognitive sciences. The key assumption is that users learn to associate promising motor actions with percepts via experience when reinforcement signals (success/failure) are present. They also learn to categorize actions (e.g., "rotating" a dial), giving them the ability to name and reason about affordances. Upon encountering novel widgets, their ability to generalize these actions determines their ability to perceive affordances. We implement this theory in a virtual robot model, which demonstrates human-like adaptation of affordance in interactive widget tasks. While its predictions align with trends in human data, humans are able to adapt affordances faster, suggesting the existence of additional mechanisms.
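To make the key assumption concrete, here is a minimal sketch of learning percept-to-action associations from success/failure signals. It is a toy tabular learner with epsilon-greedy exploration, not the released robot model; the widget names, action names, and the `train` and `perceived_affordance` helpers are all hypothetical and chosen only for illustration.

```python
import random

# Hypothetical widgets and the motor action that succeeds on each one.
# These names are illustrative assumptions, not taken from the paper's code.
WIDGET_TO_ACTION = {"dial": "rotate", "button": "press", "slider": "slide"}
ACTIONS = sorted(set(WIDGET_TO_ACTION.values()))


def train(episodes=2000, epsilon=0.1, lr=0.2, seed=0):
    """Learn a value for every (percept, action) pair from success/failure."""
    rng = random.Random(seed)
    q = {w: {a: 0.0 for a in ACTIONS} for w in WIDGET_TO_ACTION}
    widgets = list(WIDGET_TO_ACTION)
    for _ in range(episodes):
        widget = rng.choice(widgets)  # the percept for this episode
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # explore a random motor action
        else:
            action = max(ACTIONS, key=lambda a: q[widget][a])  # exploit
        # Reinforcement signal: 1 on success, 0 on failure.
        reward = 1.0 if action == WIDGET_TO_ACTION[widget] else 0.0
        # Move the estimate toward the observed outcome.
        q[widget][action] += lr * (reward - q[widget][action])
    return q


def perceived_affordance(q, widget):
    """Return the action the learner currently expects to succeed."""
    return max(q[widget], key=q[widget].get)


q_values = train()
```

After training, `perceived_affordance(q_values, "dial")` returns the action with the highest learned value for that percept. The sketch leaves out action categorization and generalization to novel widgets, which the theory treats as separate abilities.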

Watch the eight-minute presentation video to learn more:

30-second demonstration of the model:

Our Python implementation of the RL model and the MuJoCo environment have been released.

PDF, 2.9 MB

Rediscovering Affordance: A Reinforcement Learning Perspective

In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems (CHI ’22).

@inproceedings{investigating_chan,

address = {New York, NY, USA},

title = {Rediscovering Affordance: A Reinforcement Learning Perspective},

url = {https://doi.org/10.1145/3491102.3501992},

doi = {10.1145/3491102.3501992},

booktitle = {Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems},

author = {Liao, Yi-Chi and Todi, Kashyap and Acharya, Aditya and Keurulainen, Antti and Howes, Andrew and Oulasvirta, Antti},

year = {2022},

}

For questions and further information, please contact:

Yi-Chi Liao

Email:

yi-chi (at) aalto.fi

Acknowledgements:

This project is funded by the Department of Communications and Networking (Aalto University), Finnish Center for Artificial Intelligence (FCAI), Academy of Finland projects Human Automata (Project ID: 328813) and BAD (Project ID: 318559), and HumaneAI. We thank John Dudley for his support with data visualization and all study participants for their time commitment and valuable insights.